Potatolemon - Planar Classification

Previously on Potatolemon…

- Logistic Activation Function

- Neuron Weights

- Layers and Networks

- Losses

- Backpropagation (Part 1)

- Backpropagation (Part 2)

Previously on potatolemon, we finished deriving the formulas behind backpropagation and implemented them. After a lot of testing and bug hunting, we validated our library by building a network to solve a small toy problem (learning the XOR gate).

Planar Classification

Since we now have what appears to be a working library, it’s time to put it to the test with another toy problem!

So, in this post, we will look at a planar classification example, which further tests our network in a binary classification setting.

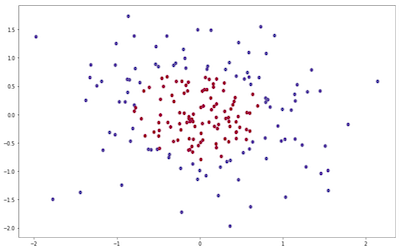

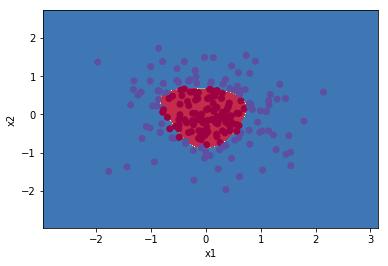

The problem we will use is to classify Gaussian quantiles apart from one another. When plotted on a 2D plane, here is what our classification problem looks like. How can we draw a decision boundary that separates the red and blue dots?
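To make the dataset concrete, here is a minimal sketch of how such points could be generated with only the standard library. It assumes the usual construction of Gaussian quantiles: points are drawn from a 2D standard normal and labelled by whether they fall inside or outside a circle around the origin. The function name `make_gaussian_quantiles_2d`, the sample count, and the seed are hypothetical and are not taken from the notebook.

```python
import math
import random


def make_gaussian_quantiles_2d(n_samples=200, seed=0):
    """Sample 2D standard-normal points and label them by distance from the origin."""
    rng = random.Random(seed)
    # For a 2D standard normal, the squared radius follows a chi-square
    # distribution with 2 degrees of freedom, whose median is 2 * ln(2).
    # Splitting at the median gives two classes of roughly equal size.
    threshold = 2.0 * math.log(2.0)
    points, labels = [], []
    for _ in range(n_samples):
        x, y = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        points.append((x, y))
        labels.append(1 if x * x + y * y > threshold else 0)
    return points, labels


points, labels = make_gaussian_quantiles_2d(n_samples=1000, seed=42)
print(sum(labels) / len(labels))  # fraction of outer-class points, close to 0.5
```

Because the classes are split by a circle rather than a line, no single straight boundary can separate them.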

Again, this is a non-linear problem (similar to the XOR problem we faced last time), so it requires at least one hidden layer to solve. In this case, we use a network with a single hidden layer that is 5 neurons wide, to see if we can learn to classify these points.
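The shape of this network can be sketched in plain Python. This is an illustration of the architecture only (2 inputs, 5 hidden neurons, 1 output), not the potatolemon or PyTorch API. The helper names, the logistic activation on the hidden layer, and the `[-1, 1]` initialisation range are all assumptions made for the example.

```python
import math
import random


def sigmoid(z):
    """Logistic activation, squashing any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def init_layer(n_in, n_out, rng):
    """One weight row and one bias per neuron, drawn uniformly from [-1, 1] (assumed range)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-1.0, 1.0) for _ in range(n_out)]
    return weights, biases


def layer_forward(inputs, weights, biases):
    """Weighted sum plus bias for each neuron, followed by the logistic activation."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


rng = random.Random(0)
hidden = init_layer(2, 5, rng)  # 2 input features -> 5 hidden neurons
output = init_layer(5, 1, rng)  # 5 hidden neurons -> 1 output probability


def predict_proba(point):
    """Forward pass through both layers for a single (x, y) point."""
    return layer_forward(layer_forward(point, *hidden), *output)[0]


n_params = sum(len(w) * len(w[0]) + len(b) for w, b in (hidden, output))
print(n_params)  # 21
print(predict_proba((0.5, -1.2)))  # untrained output, somewhere in (0, 1)
```

With only 21 trainable parameters, this is a very small model, which matters for the training-time comparison later on.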

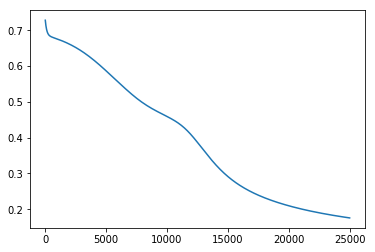

We first use our reference library (PyTorch) to train a network.

After training, we get the following results:

This looks pretty good: the boundary clearly separates the two classes, and the final accuracy for this model is 97%. We could probably improve this by increasing the size of our network if we wanted to.
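For reference, an accuracy figure like this is typically computed by thresholding the network's output probability and counting matches against the true labels. The sketch below assumes a 0.5 threshold, and the `accuracy` helper and the sample values are made up for illustration. The notebook may compute it differently.

```python
def accuracy(probabilities, labels, threshold=0.5):
    """Fraction of examples whose thresholded prediction matches the true label."""
    predictions = [1 if p >= threshold else 0 for p in probabilities]
    correct = sum(int(pred == y) for pred, y in zip(predictions, labels))
    return correct / len(labels)


# Hypothetical network outputs and true labels for five points.
probs = [0.92, 0.10, 0.55, 0.40, 0.81]
truth = [1, 0, 0, 0, 1]
print(accuracy(probs, truth))  # 0.8
```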

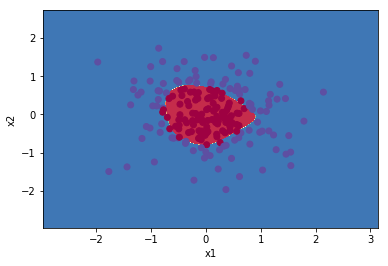

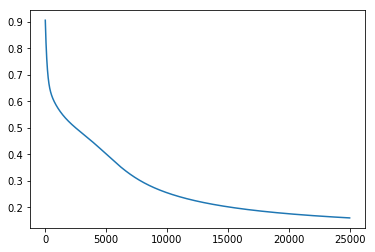

Using potatolemon, we see the following training results:

This results in a very similar boundary.

The overall accuracy for our potatolemon network is 96%, so it is fairly close performance-wise, yet still different. Where do these differences come from? There are probably several sources, from the initialisation of the weights (which are drawn randomly from uniform distributions) to implementation details of the forward and backward passes themselves (as even very minor numerical precision differences can produce large differences in outcome over the course of many epochs). Also, let’s not discount the fact that there still might be an elusive bug somewhere in potatolemon ;)
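Both effects are easy to demonstrate in isolation. The snippet below is a small illustration rather than code from either library: `init_weights` is a hypothetical helper, and the `[-1, 1]` range is an assumption.

```python
import random


def init_weights(n, seed):
    """Draw n weights uniformly from [-1, 1] using a dedicated, seeded generator."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]


same_a = init_weights(5, seed=1)
same_b = init_weights(5, seed=1)
other = init_weights(5, seed=2)
print(same_a == same_b)  # True: same seed, same starting weights
print(same_a == other)   # False: different seed, different starting point

# Floating-point addition is not associative, so two implementations that
# sum the same terms in a different order can already disagree slightly.
left = (0.1 + 0.2) + 0.3
right = 0.1 + (0.2 + 0.3)
print(left == right)  # False
```

Two libraries with their own random number generators and their own order of operations will therefore not follow exactly the same training trajectory, even on identical data.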

Aside from the accuracy, the potatolemon network took nearly twice as long to train as the PyTorch one. This is quite a large performance difference given the small size of the network, where you would not expect the vectorisation and parallelism offered by PyTorch to make such a huge difference.
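A comparison like this can be made a little more robust by timing each training run several times and keeping the best result. The helper below is one possible sketch. `time_training` and `dummy_train` are hypothetical names, and `dummy_train` is only a stand-in for a real training call from either library.

```python
import time


def time_training(train_fn, repeats=3):
    """Return the best wall-clock time in seconds over several runs of train_fn."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        train_fn()
        durations.append(time.perf_counter() - start)
    return min(durations)


def dummy_train():
    """Hypothetical stand-in for a training loop; replace with a real one."""
    total = 0.0
    for i in range(100_000):
        total += i * 0.5
    return total


elapsed = time_training(dummy_train)
print(f"{elapsed:.4f} s")
```

Taking the minimum over a few repeats reduces the influence of one-off slowdowns such as other processes competing for the CPU.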

These two minor points aside, the results of this test are by and large another validation of our network and of the fact that it has been implemented correctly! Huzzah!

In the next post, we’ll look at multiclass classification and see how well our network handles that!

The code for the tests above can be found at: https://github.com/qichaozhao/potatolemon/blob/master/examples/planar_classification.ipynb

All the rest of the code can be found on the GitHub project: https://github.com/qichaozhao/potatolemon

Peace out for now!

-qz