Trying to train an LLM to talk to my wife

Over the past few months, I’ve used LLMs (GPT-4 mainly) to help me create an Android App (see: https://qichaozhao.github.io/gpt4-app/), and also as the backend for an app itself (see: https://qichaozhao.github.io/mingxing-app/).

Now, we’ll take the LLM exploration one step further and look at how we can fine-tune an LLM model for a specific task more than just prompting.

The task at hand? To produce a conversational agent capable of impersonating me to respond to my wife’s texts. Now, the main motivation for this use case is not because I don’t like texting wifey (I do <3), but rather that it provided a more unique and interesting challenge than just “take X model and fine-tune on Y dataset”.

Methodology

When I started looking into this project, Mistral’s 7-billion parameter model had just been released, so I decided that would be a good baseline model to use. On top of this model I wanted to explore two main methodologies:

- Prompting LLMs with Retrieval Augmented Generation (RAG)

- Fine-Tuning

I will give a layman’s overview of both later on in the relevant sections. My own expectations were that RAG would perhaps provide only marginal benefits, but fine-tuning had the possibility of being very effective.

Aside from the text generation method, a big part of any ML or AI project is how we evaluate effectiveness.

For this project, there were a few different approaches I ended up using.

- ROUGE score

- GPT as Judge Evaluation

- Human Evaluation (thanks to my wife)

I’ll also go into more depth on how I set up the evaluation approaches when we talk about the results.

The Dataset

So, in order to train or prompt an LLM to respond like me to my wife, we need a usable dataset of said responses as examples. Luckily (or rather, by design), I have just the dataset for this purpose - our WeChat message logs (for those who are not aware, WeChat is the primary texting app in China - much like WhatsApp).

Extracting messages from WeChat was nowhere near as simple as I was expecting it to be. For those interested, these are the steps needed for an Android device:

- Root your phone

- Get the encrypted WeChat message database (called EnMicroMsg.db), this is an SQLCipher encrypted database

- Derive the encryption key using your IMEI and the Wechat UID (which can be found in the WeChat config files)

- Dump out the messages using SQL

Luckily, I’m not the first person to want to do this, and there are some excellent available open-source tools for this:

Unfortunately though, they didn’t work out of the box for me owing to how Android ADB treats root shell access now compared to how it was a few years ago. I didn’t want to root my main phone (because it would mean wiping the data), so I fished out an old Android phone I had lying around to do this on.

The main modification I had to make to wechat-dump’s decrypt-db.py script was to change how it called shell commands through adb.

As an example:

Original script call:

out = subproc_succ(f"adb shell cat {MM_DIR}/shared_prefs/system_config_prefs.xml")

Modified call:

out = subproc_succ(f'adb shell su -c "cat {MM_DIR}/shared_prefs/system_config_prefs.xml"')

So, after transferring the chat logs between my wife and I to the old phone and getting the script running, I was able to dump out all of our message history from the last 5 years into a text file! A good first step!

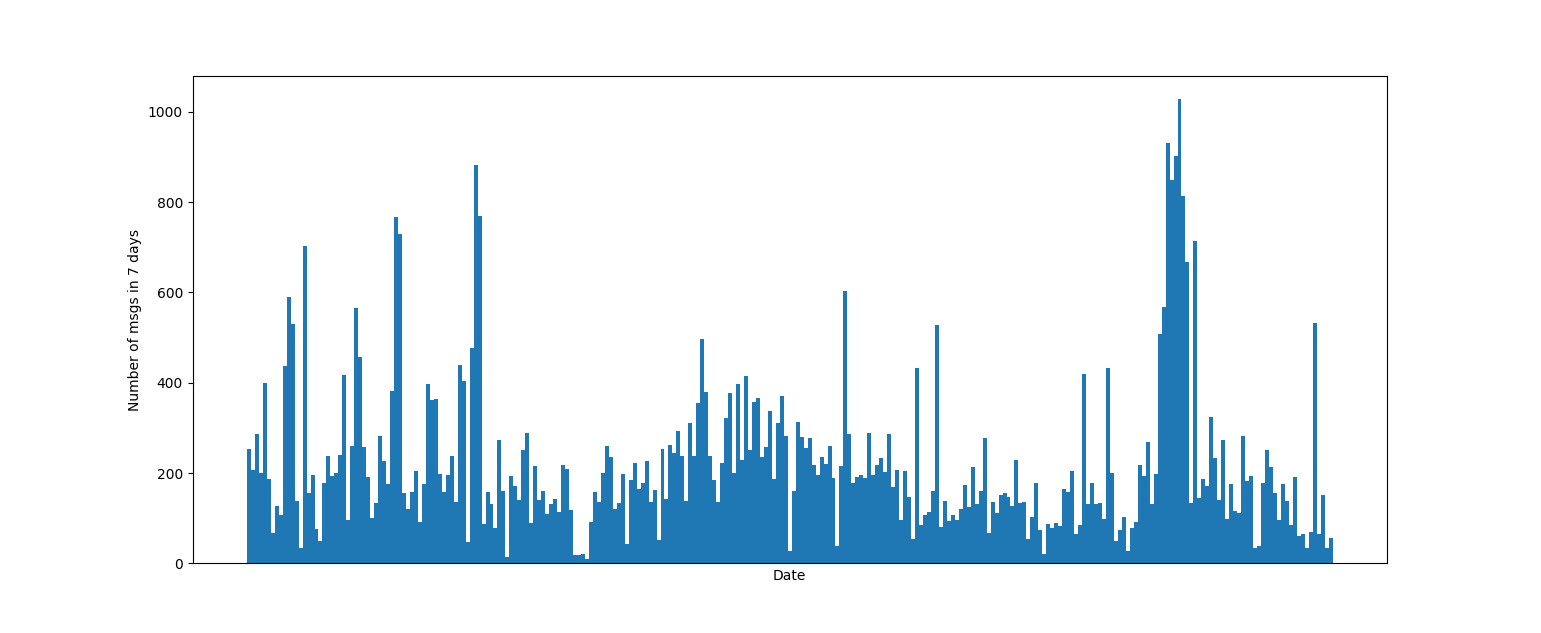

So, what are some takeaways from this dataset?

I plotted our weekly messages count over time. It’s definitely not as stable of a distribution as I was expecting, but on further examination it makes sense, as the peaks correspond with times when one or both of us were travelling separately, and the troughs correspond with holiday periods.

In total over the ~5 year period this dataset spans, we exchanged 84873 messages! Is that a lot? I don’t know…maybe other data scientists with partners and messaging logs can also share their stats.

What are our most used words? The top 3: haha, happy, lunch

Our most used emojis? The top 3: [Chuckle], [Facepalm] and [Grin]

Reading through our message history, as expected from the top 3 vocabs and emojis, it is mostly light hearted and positive. In fact, some of it is downright saccharine, so I won’t be sharing too many raw examples - I have an image to maintain after all! :P

As I’m sure with any couple over time, we have developed our own language “code”, so to speak, which we only use with each other. This includes unique vocabulary, as well as non-standard grammar and in-jokes. Over time (as my Chinese has improved) we have also gravitated to send a mixture of Chinese and English in our messages. In my opinion, this makes for a good challenge for an LLM generation exercise, as if we could get an LLM to capture these specific nuances of our communication style and also facts about us, then that would be a pretty good achievement (especially for a 7-billion parameter model).

To pre-process this data into a more suitable format for LLM training or prompt usage, I first performed some message aggregation; both me and my wife (but me to a much greater extent) are somewhat guilty of sending multiple short messages as part of one longer reponse. Then, I concatenated messages and responses that occurred within a 5 minute window together to build a longer conversational context.

So, at the end of it all, I had a dataset with message-reply pairs, and up to 5 previous message-reply pairs which occurred within 5 minutes of each other to provide as much context as possible.

Generation Approaches

As discussed above, the two main approaches I wanted to explore in this project was LLM prompting with Retrieval Augmented Generation (RAG) and Fine-tuning. However, to set a baseline for comparison, I first started with just prompting the LLM to see how well I could make it perform.

Standard Prompting

After playing around with various different prompts, the one which I settled on was a combination of 3 things:

- Providing facts and background information for me and my wife.

- Providing instruction on how I communicate in terms of style with my wife.

- Providing hand picked samples (I limited it to 3) of what I thought were representative examples of how we texted.

Although I won’t be sharing the exact prompt (because it does contain a bunch of personal data), it looks something like this:

Here are some facts about me: {facts}

Here are some facts about my wife: {facts}

Here is a description of my communication style with my wife: {description}

Here are some examples of messages between us: {examples}

Given the above, act as me to respond to the following message from my wife: {conversation context}

Given the above, here are a few cherry-picked examples of the results it was able to generate:

Example 1:

Message (from wife to me): How about a Friday bike ride to have some food? Somewhere we can see our bikes.

---

LLM: That sounds like fun! Where would be your favorite spot to go on a Friday afternoon bike ride that allows us both to enjoy beautiful scenery while eating delicious meals together?

---

Reference (my actual response): sure, but where to? do you have somewhere in mind?

In this case, and many cases like this, the sentiment that the LLM expressed was not too far off my own. However, it’s easy to see there is a big stylistic difference in the reponses. I was hopeful that RAG and/or fine-tuning would be able to address this.

Example 2:

Wife: They say everyone who enters the terminals which doenst mean everyone who gets on the plane.

Me: yep.

Wife: I'm sure for taking the flight, you need it.

Me: i think i'll book two tests at a hospital. or something. the normal 核酸点 is shared tubes. which is too risky now.

Wife: ah okay. Maybe find one hospital to test first and see how long does it take for them to get you a result. They are normally slower than the testing area.

---

LLM: Ill check if there any hospitals that offer rapid covid19 pcr test near by. If yes, ill go ahead and schedule an appointment. Thanks again for your help babe :)

---

Reference: yeah...dunno if the one next to us has it. will call them later.

In cases like this, again the response seems reasonable to a certain degree. However, it would frequently use nicknames such as ‘honey’ or ‘babe’ in the responses, something which I never say. Even with an example provided in the prompt the LLM still didn’t pick up the correct nicknames to use.

I was again hopeful that RAG and/or fine-tuning would be able to address this.

Example 3:

Wife: Yep haha. Shall we continue to watch ep10?

---

LLM: Sure thing! Let's keep going on our climb together 😊

---

Reference: Anything you wanted clarifying?

In quite a few cases, especially when the preceding conversation context was short or contained Chinese terms, the LLM would completely misunderstand the context and say something a bit nonsensical. I wasn’t sure if examples like this would be improved through fine-tuning or RAG, as it seems to be more linked to just not having enough context in the conversational history to generate a plausible response.

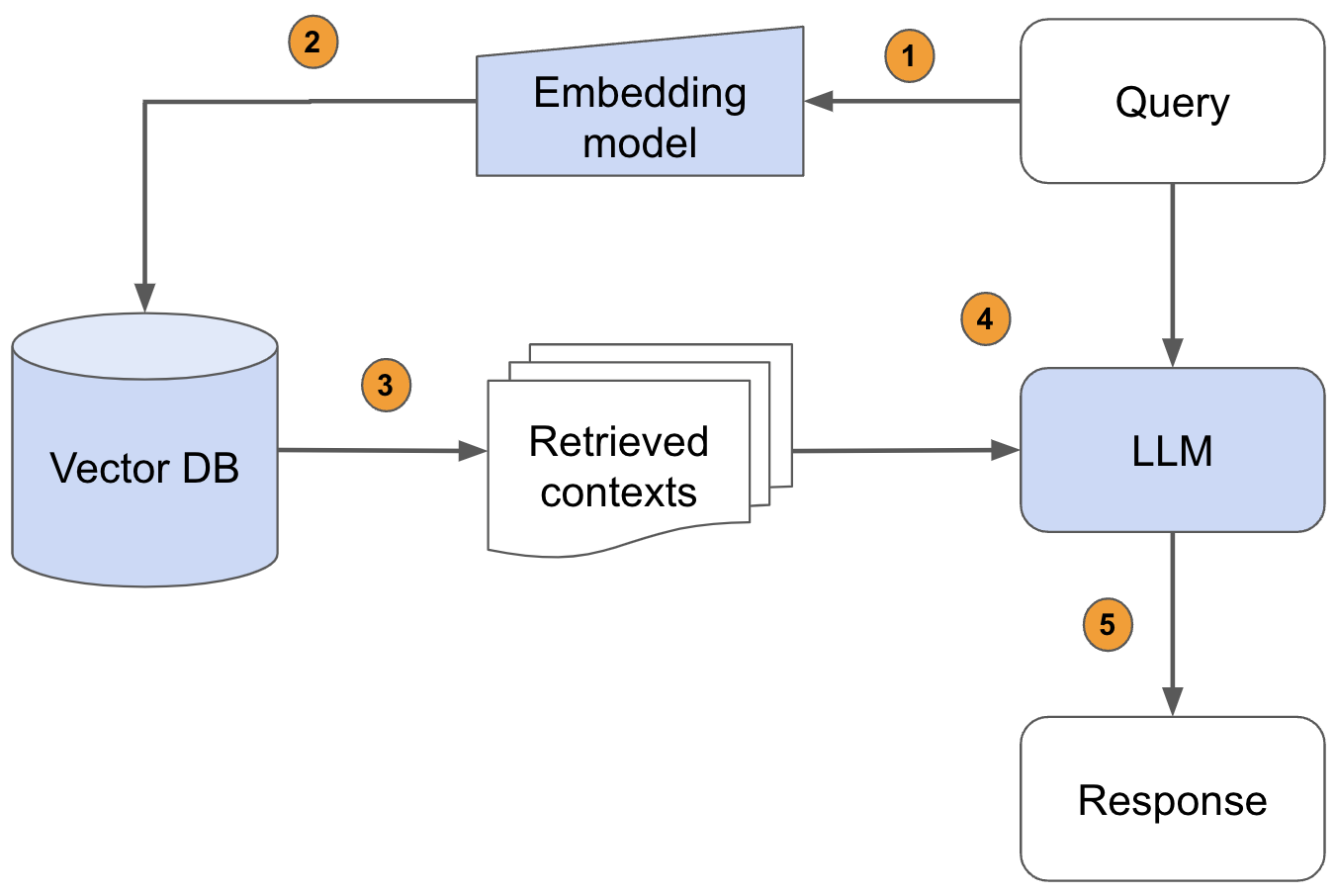

Prompting with RAG

So, what exactly is Retrieval Augmented Generation? Well in simple terms it is all about providing more relevant context in the prompt in order to help the LLM generate better results.

The way that RAG aims to provide this relevant context is by using aspects of the initial prompt to search relevant information in a database store, and then using the results (which in theory should be relevant) to augment the prompt with additional information that the LLM can then use to complete the prompt instruction.

In more concrete terms for our particular use case, I used Vector DB (ChromaDB for those interested), and stored embeddings for each message with the reply as additional metadata.

This gives us a store of messages which we can lookup, and when the LLM is prompted with a certain message, the idea is that it can use this to look up similar messages that I may have received in the past and provide examples of responses to reference against.

In this way, the final prompt to the LLM would be modified from basic prompting to be of the below form:

Here are some facts about me: {facts}

Here are some facts about my wife: {facts}

Here is a description of my communication style with my wife: {description}

Here are some examples of messages between us similar to the one you will be shown below: {RAG retrieved examples}

Given the above, act as me to respond to the following message from my wife: {conversation context}

This I think is only one way to use RAG in this kind of conversational agent, I could also imagine that RAG would be a great way to provide relevant facts based on keywords in the prompt that would give better context for the LLM to generate a response. However, such a fact database would have been quite consuming to generate and so it was not something I had time to explore in this project.

So, qualitatively evaluating the results, it didn’t really appear that RAG made a huge difference over plain prompting in many cases. However, for the 3rd example above, the LLM was able to determine that we were watching episodes correctly due to the additional information.

Example 1:

Wife: Yep haha. Shall we continue to watch ep10?

---

LLM: Sure thing! Let's keep going on our climb together 😊

---

LLM (RAG): Sure thing, let's keep watching episode 10 together!

---

Reference: Anything you wanted clarifying?

Obviously, it was still pretty far from the reference response, but definitely more believable than before.

However, other cases did not fare as well:

Example 2:

Message: still at work?

---

LLM: Yes, still here working on some data analysis tasks.

---

LLM (RAG): Yes, sorry honey! Just finishing up some tasks before heading home.

---

Reference: Yeah. Nearly done though. Leaving in 3 minutes!

We can see here that it is still making nickname mistakes. And Example 1 from before became worse.

Example 3:

Message: How about a Friday bike ride to have some food? Somewhere we can see our bikes.

---

LLM: That sounds like fun! Where would be your favorite spot to go on a Friday afternoon bike ride that allows us both to enjoy beautiful scenery while eating delicious meals together?

---

LLM (RAG): Sure thing! Let's plan a fun Friday evening together by taking a leisurely bike ride around town while enjoying delicious cuisine at one of our favorite spots. We should definitely check if any local parks or trails offer scenic routes that would allow us to enjoy both activities simultaneously. What do you think she means when saying "someplace where we can see our bikes"?

---

Reference: sure, but where to? do you have somewhere in mind?

And finally, with the RAG respones there was one other issue - it started copying and pasting previous responses from the conversation as it’s current response. For example:

Example 4:

Wife: No table booked. they dont' take reservation.

Me: oh oh. well then i'll leave asap. get us a table.

Wife: haha i'm sure judging by the weather, there wouldn't be too many people.

Me: It's Friday night.

Wife: Yeah but still i think it should be fine.

Me: lets see :D.

Wife: Oh maybe you shouldn't take your bike? Gonna pick up the car later right?

Me: i should.

Wife: Maybe you should take my bike.

Me: i cycle to the car, drive, tomorrow when i return the car i cycle back.

Wife: Then i'll take a taxi back with momo. Ah hahh okay.

---

LLM (RAG): oh oh. well then i'll leave asap. get us a table

If you’ll notice, the RAG response is directly copied from my first response in the conversation context.

To resolve this problem, there was probably additional prompt engineering I could have done, but it was not something I explored in too much further detail as I felt that fine-tuning would probably provide overall better results.

Prompting a Fine-tuned Model

In LLM parlance, there are two distinct phases of training a model: pre-training, which is the from scratch training of the model parameters that takes a lot of compute and time and vast amounts of data that represent text from across the internet. Then there is fine-tuning, which uses a smaller specialised dataset to make changes only to a subset of the model parameters, and requires far less time and resources to accomplish.

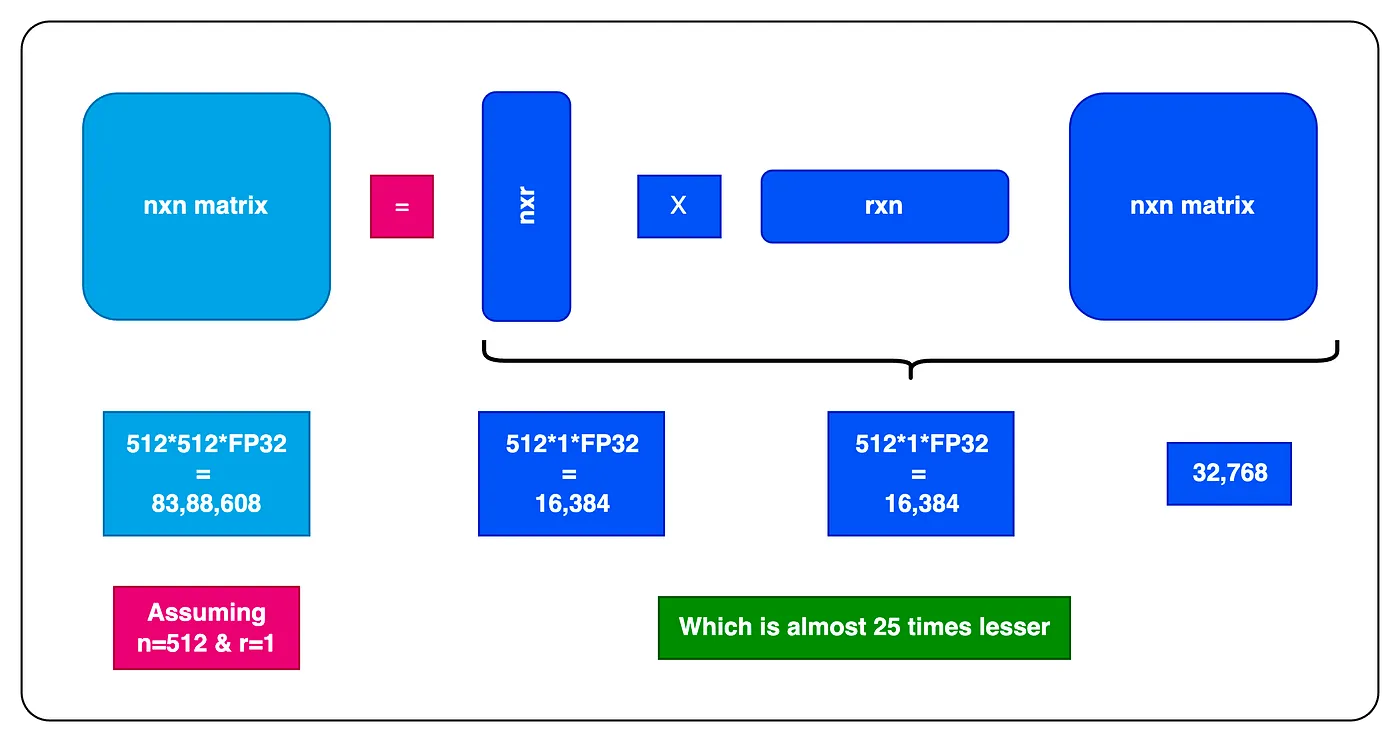

Techniques called Parameter Efficient Fine Tuning (PEFT), namely LoRA (Low Rank Adaptation) and qLoRA (Quantized Low Rank Adaptation), allow consumer grade GPUs to fine-tune models in a reasonable time-frame.

The intuition behind LoRA and qLoRA is as follows:

- LoRA: Instead of updating parameter weights for the full model (7 billion in Mistral’s case), we instead learn the weights for a smaller matrix or “Adapter” that is inserted as the final stage of the base model. With the base model’s parameters frozen, it takes far less compute and data to learn a good adapter.

- qLoRA: Introduces quantization of the model parameters, which is a memory saving measure (the main bottleneck for training LLMs being vRAM available or the time it takes to compute). This converts the standard 32-bit floating point representation for parameter weights into less memory hogging floating point types (e.g. 16-bit, 8-bit or 4-bit), trading off the ability to represent more precise weights.

We can see from this diagram a low rank (rank = 1) decomposition of a 512x512 matrix (which can be considered the base model output), into a 512x1 matrix and a 1x512 matrix.

This works because a 512x1 matrix multiplied by a 1x512 matrix “recovers” a 512x512 matrix, but the actual number of parameters in the low rank matrices end up being far less (262144 vs 1024).

If we wanted to increase the fidelity of the decomposition (e.g. if we have extra memory or time available), we can increase the rank of the decomposing matrices and train more parameters.

Practically, what this means is that to fine-tune this model I can actually run it on a Google colab instance using a V100 GPU with 16GB of vRAM, just as well as it’s basically impossible to get allocated A100 GPUs right now.

So then it was just a case of preparing the fine-tuning data into the format required by Mistral (see: https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.1)

For conversations with just one message-reply pair, it looked as follows:

<s>[INST] i can order from my side. [/INST]

ok, please do the needful. and revert.</s>

For conversations with multiple back and forths:

<s>[INST] Can we go bouldering tomorrow and play badminton on Sunday? [/INST]

Dunno if I can boulder with my back this way. But let's see how it is. In theory no problem!</s>

[INST] aww. Are you going to gym today? [/INST]

Yes, I'm there now but just some time on the treadmill, no weights.</s>

There are a few Mistral fine-tuning guides around, and most of the code is also re-usable from fine-tuning Llama guides. I found the following two helpful as a step-by-step walkthrough with code examples:

- https://gathnex.medium.com/mistral-7b-fine-tuning-a-step-by-step-guide-52122cdbeca8

- https://adithyask.medium.com/a-beginners-guide-to-fine-tuning-mistral-7b-instruct-model-0f39647b20fe

But putting the whole script together, understanding the components and so on still required a bit more Googling along with reading of the Huggingface docs, and, it has to be said a bit of trial and error.

The most time consuming part for me was not actually the fine-tuning, which on Google Colab took only an hour or so for an epoch, but rather the difficulty came when I wanted to merge the LoRA adapter with the base model to create a separate quantized (4-bit) model to push to huggingface. The reason why I wanted to do this was so that I could then use this model on my local machine (which only has a piddly RTX 3070 with 8GB vRAM) to perform inference with.

Well, long story short this plan of mine didn’t pan out in the end. Firstly because it actually takes way more vRAM to quantize a model than training a LoRA, and secondly I found that after quantizing the model to 4-bit the quality of the outputs really became terrible.

In the end I decided to pay for more Google Colab compute credits and carried out all the inference testing with as little quantization as possible (I ended up using FP16 in the end).

So, how well did the fine tuned model perform based on my own qualitative observations?

The good: it definitely picked up and consistently deployed a language code that felt similar to my communication style. For example, it deployed the right nicknames, speech patterns and also mixed Chinese with English.

The bad: it did so in such an over the top way that the responses became a caricature of how I would normally respond (in my opinion). For example, I use emojis in ~5% of my messages, but the fine-tuned model has inserted them in ~25% of its responses, and often it’s usually many emoji strung together. It also excessively uses some of my own frequently used language (like haha), and has also clued into my style of messaging in that there are lots of short sentences.

When prompting the fine-tuned model for output, I had to include as part of the prompt to answer maturely and concisely, without excessive use of emojis. But I think unfortunately the model has overfit to the point that even these instructions in the prompt have limited effect, so that responses often contain big chains of things like xxx, <3, [Heart], [Kisses]

And while I’m definitely not averse to sending similar things over text and obviously do it with enough frequency such that the fine-tuning has picked up on it, I definitely don’t do it to anywhere near the degree that the fine-tuned output suggests.

Example 1:

Wife: still at work?

---

LLM: Yes, still here working on some data analysis tasks.

---

LLM (RAG): Yes, sorry honey! Just finishing up some tasks before heading home.

---

LLM (FT): Yep. Just got home haha. Are you gonna be here soon tooo? Hahha

---

Reference: Yeah. Nearly done though. Leaving in 3 minutes!

In this example we can see some of what is mentioned above (short sentences, overuse of haha, cutesy lengthening of too), but we can also see that the FT-model’s response doesn’t make sense either (yes I’m at work, I just got home…). This though may be more a limitation of 7B models to be coherent rather than anything to do with the fine-tuning.

Quantitative Evaluation

By this point, we now have a good feel of the kind of results that each approach has managed to produce. But, we still need a quantitative way to evaluate them against each other.

ROUGE Score

The most basic approach is a classic NLP Metric. Essentially it measures the percentage of n-grams (where n is user defined) that appear in both the reference text and the generation candidate. This is to say, if the LLM generated response can have more words that overlap with my actual response, then it will obtain a better ROUGE score. Here is how my 3 approaches fared:

| Prompt | Prompt (RAG) | Fine-Tuned | |

|---|---|---|---|

| ROUGE1 Score | 0.039 | 0.057 | 0.047 |

Based on this, it appears the RAG approach actually performed the best. But, in this case ROUGE is probably not the best measure of how similar a response is to my own response, as a response can feel similar without having much word overlap at all so long as the right language code is being employed.

So, onto the next evaluation method:

GPT as Judge

Since there is some amount of subjective judgement in what actually makes two responses similar, I decided to ask OpenAI’s GPT3.5-turbo to give similarity scores for my actual response and the 3 candidate responses. I used the following prompt:

You are an assistant which evaluates stylistic similarity between different pieces of text. Given an input as follows:

{

"reference": "this is the text to compare the other texts against",

"result_rag": "this is the first candidate",

"result": "this is the second candidate",

"result_ft": "this is the final candidate"

}

Evaluate the stylistic similarity of the candidate texts (in the fields result_rag, result and result_ft) against the text in the reference field, giving each a score between 0 to 100.

Return your response formatted as JSON as per the below example with the scores for each candidate in the appropriate field and explain your reasoning in the reasoning field:

{

"result_rag": score_1,

"result": score_2,

"result_ft": score_3,

"reasoning": "your reasoning"

}

Do not return any additional text outside of the JSON object.

The results are as follows:

| Prompt | Prompt (RAG) | Fine-Tuned | |

|---|---|---|---|

| GPT3.5 Mean Similarity | 57 | 51 | 38 |

What surprised me here is the low performance of the fine tuned model compared to vanilla prompting. I can only surmise that GPT’s evaluation penalised the fine tuned results especially due to their quite caricaturish and over the top nature.

Human Evaluation

So although we have some other quantitative benchmarks, this is really the only one that matters - could my wife accurately discern LLM generated responses from my real responses?

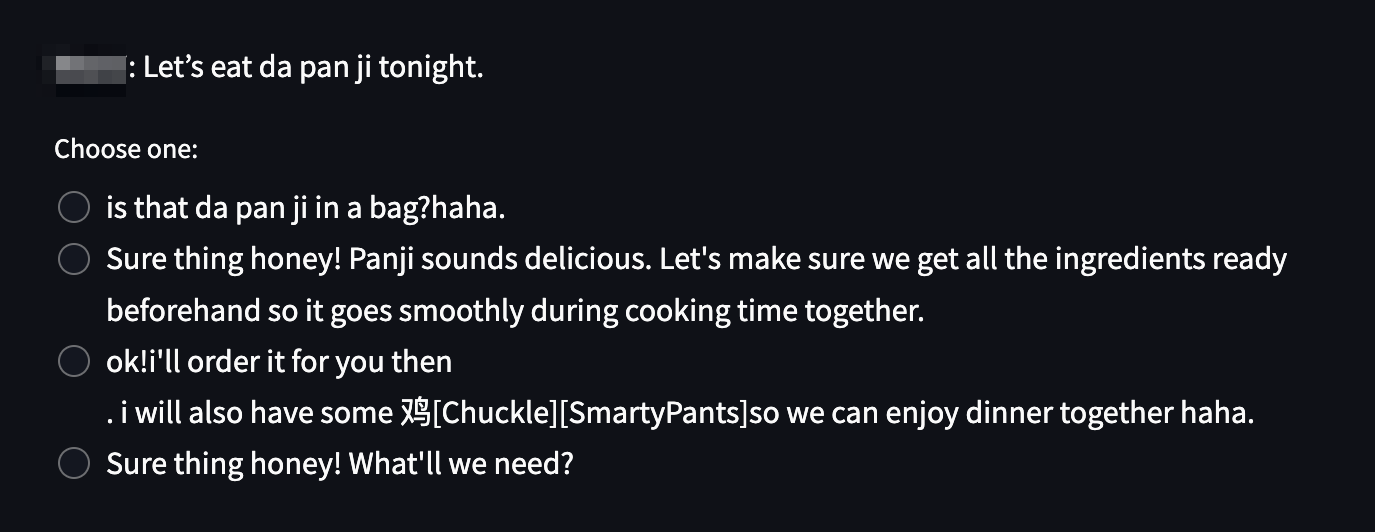

To whit, I extracted 100 random samples from a subset of the dataset where the average GPT similary score was > 50, reasoning that this would give the LLM the best chance possible, and set up a series of multiple choice questions for her using Streamlit.

One choice would be my actual response, with the other 3 corresponding to the 3 types of LLM generation. The challenge for her would be to correctly identify the real response each time.

Here were the results:

| Prompt | Prompt (RAG) | Fine-Tuned | Real Response | |

|---|---|---|---|---|

| % identified as | 1% | 6% | 3% | 90% |

So, no surprises. With the current state of the system, we are a long way off from being able to generate realistic messages. She was able to discern the my actual response from the LLM generations with a 90% accuracy rate.

Although this appears pretty bad on the surface, I actually don’t think we are too far away from a much better performing conversational agent, and I’ll talk a bit more about the reasons why in the next section.

Summary of Performance Metrics

| Prompt | Prompt (RAG) | Fine-Tuned | |

|---|---|---|---|

| ROUGE1 Score | 0.039 | 0.057 | 0.047 |

| GPT3.5 Mean Similarity | 57 | 51 | 38 |

| % identified as | 1% | 6% | 3% |

Conclusions

There are two sets of observations and conclusions from this little project, the first set being about actually working with and training LLM’s for tasks, the second about the potential of this chat agent to improve further.

Working on LLMs

I think despite the amazing capabilities of LLMs and their very distinct differentiation from other machine learning models in the public eye, working with them as a practitioner is actually very similar, especially when performing fine-tuning.

The data that the model is trained on is still of the utmost importance, and you still have to pay attention to all of the usual machine learning training details (although I didn’t bother with anything like hyperparameter tuning, just using the recommended defaults).

The additional complexity of working with an LLM comes with the prompting, for which currently I don’t believe there is a good way to optimise it as you would other “hyperparameters”, so it’s a manual process guided mainly by intuition and low sample sizes.

Finally, there’s no getting away from the fact that even training “small” LLMs requires a reasonable amount of computing resources, and while there exist methods to reduce memory footprint for training and inference, if we’re starting already with a 7B model then further quantization may make the performance unacceptable for many tasks.

The Chatbot

As for the chatbot itself, while I was a little disappointed at the quantitative results (I was aiming for a ~30% hit rate), there was actually plenty of promise and I think plenty of ways to tune the LLM for better performance.

-

For RAG (and the baseline prompting method), adjusting the prompt to give the model more specific instructions or lists of customised words to use may improve the indistinguishability of the responses. When my wife was making evaluations, one of the most useful selection heuristics for her was the presence of the word

honeyorbabe. -

For RAG, adjusting the prompt in order to avoid the model “copying” previous conversational snippets may also improve the performance noticeably, as this is also a very clear giveaway.

-

For RAG, incorporating a second type of data source for “facts” which are relevant to the conversation at hand I believe would have been a big help to the LLM to avoid a lot of the logical errors (which I think would be mainly a result of lacking context).

-

For the fine-tuned model, I believe the reason it became such a caricature of how I would normally talk is perhaps it became overfitted on the fine-tuning data. I think this could probably have been resolved by putting more effort into cleaning the data itself, and selecting fewer higher quality examples to perform the training with. After all was said and done, I had ~6000 message-response pairs to train with (and ~1700 in the test set), but we already know from other LLM fine-tuning projects that even ~1000 high quality examples can be enough to fine-tune a model, and more than that may result in over-tuning (which I guess is what has happened here).

One of the interesting things I observed with the fine-tuned model is that it would even stop being reactive to instructions given in the prompt such as:

don't use emojis excessively!(it still used emojis excessively). This behaviour became basically uncontrollable in the 4-bit quantized version of the model. This is most likely another symptom of over fine-tuning the model, and probably would not be an issue if we opted for a model with more parameters or fine-tuned for less. -

Given the performance we have observed fine-tuning or RAG alone may not be enough to truly build a convincing agent, but if we combine the two together, using RAG to provide contextual facts and then a better fine-tuned LLM to generate the right style, then that I think holds potential to greatly improve the performance.

So, hopefully this has been an interesting read into my first real LLM fine-tuning project. It was a great deal of fun, and I learned a lot, which makes this a success in my books regardless of the final score :)

Thanks also to my wife for being a willing guinea pig for these experiments!

Till next time!

-qz